Situation:

User testing at Marxent was in its early stages during my first few months there. I played a large role in structuring the tests so that they produced consistent, meaningful findings to inform our product.

My role included writing the script, prototyping, moderating the test, collecting results, and helping set direction based on those results. We wrote scripts to test specific areas of the apps in development, and we needed quick prototypes to better predict how each new feature would be received. Tests ranged from paper prototypes to Adobe XD prototypes to users reviewing final developed products.

Task:

Go to offsite locations and get direct feedback from users.

Action:

The main challenges I faced were building a cohesive testing process with little experience in user testing and little knowledge of the app and its capabilities. That was to be expected, since I had just started working on the product, but it meant I had to research best practices for testing and dig into what our app offered. After many sessions, I learned how to ask the right questions and began to understand what the results meant for our platform, which in turn helped me guide the team on critical UX decisions.

Conducting in-house tests was challenging, but running them offsite at a client's location was harder. Many of the apps we created were made for in-house designers to use, which meant we had to go to those locations and track the progress being made. Offsite visits were harder because I didn't have my usual resources readily available, and because the turnaround time for presenting results was usually cut down to whatever hours I had that same night, since results were to be shown to the client the next day.

Figuring out how to conduct the tests was the hardest part. The second hardest was figuring out how to collect all the data and organize it in a digestible form that would be easy to communicate to the relevant parties.

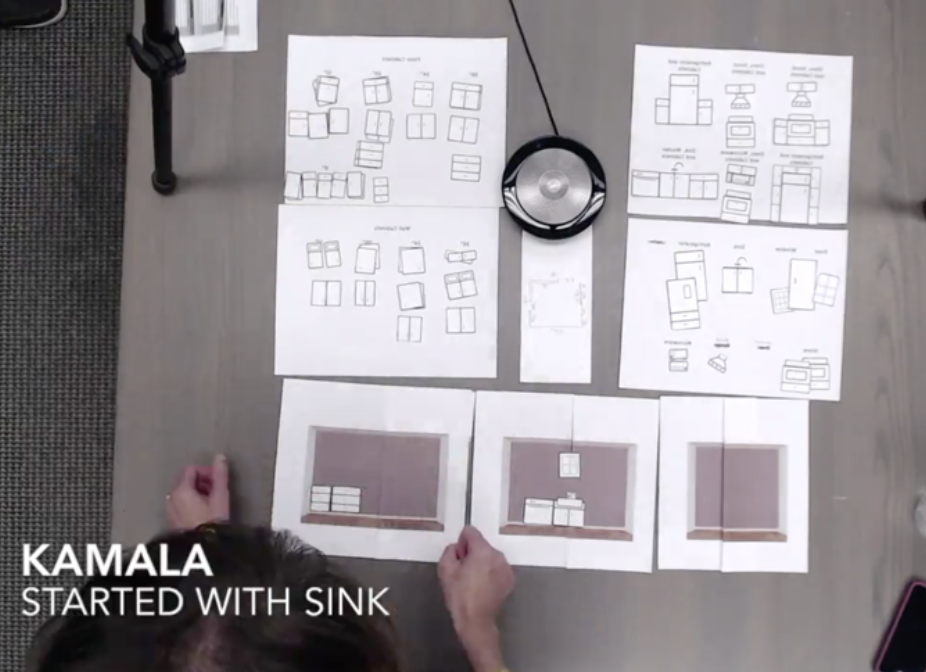

In-house paper prototype and testing

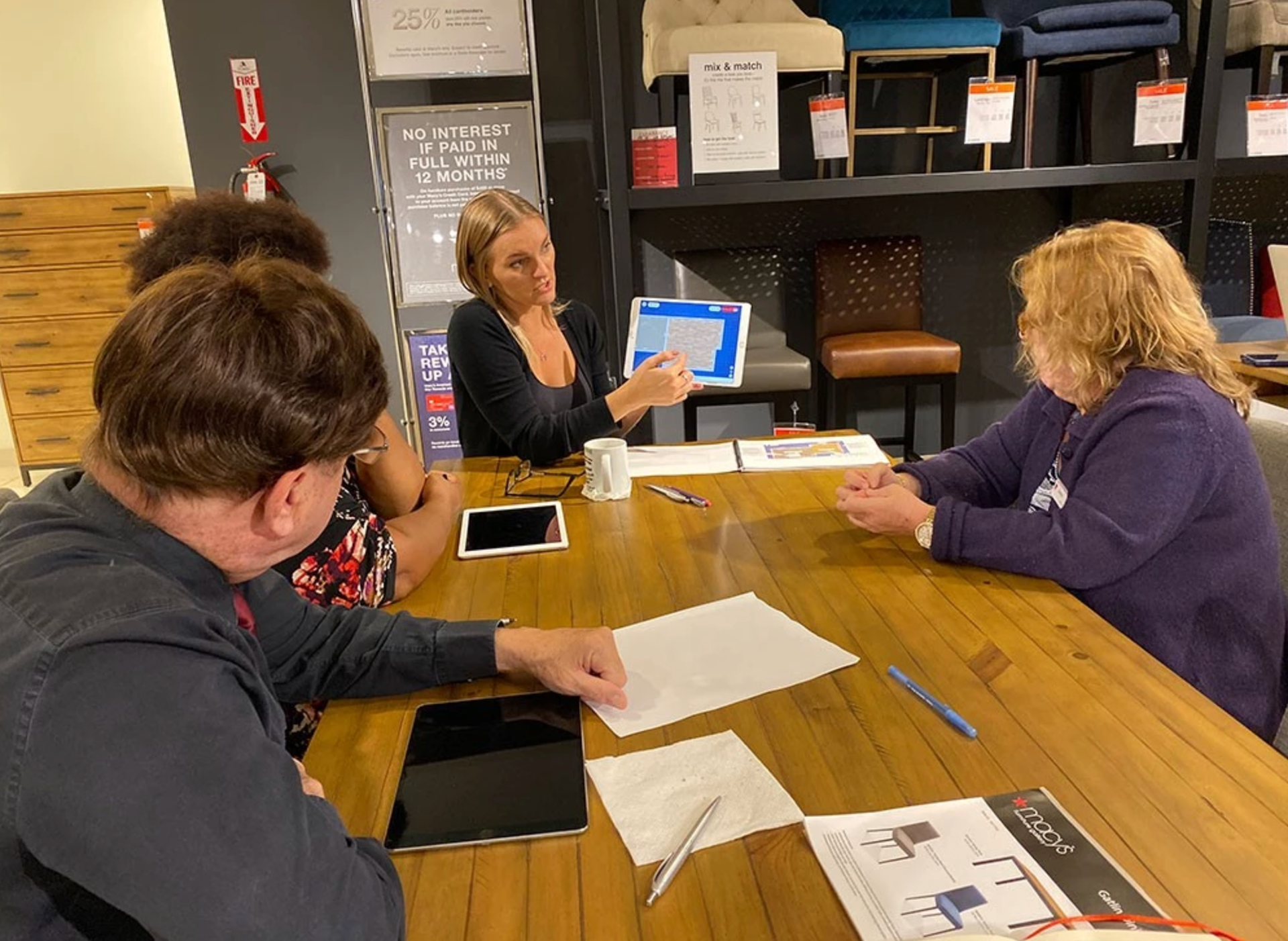

These photos were taken throughout our different offsite feedback sessions. We would visit the different locations using our VR apps, onboard the users on the app, and gather feedback on the initial setup process.

It all starts with this testing sheet. We create sheets like this to get feedback on specific areas of the app. On top of the task sheet, you can see notes taken during the session. Users follow the instructions to the best of their ability while we take notes on where they struggle.

The notes are then transferred into a new chart that helps score each individual. Scores are tallied, and anything below a certain value is marked for further review.
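As a rough sketch of the tallying step, the logic could look something like the Python below. This is only an illustration and not the chart we actually used: the 1-5 scale, the cutoff of 3.0, the per-task averaging, the task names, and the sample rows are all assumptions made up for the example.

```python
from collections import defaultdict

# Hypothetical cutoff on an assumed 1-5 scale; the real value is not stated here.
REVIEW_THRESHOLD = 3.0


def flag_for_review(scores, threshold=REVIEW_THRESHOLD):
    """Average each task's scores across participants and return the
    tasks whose average falls below the threshold, lowest first."""
    by_task = defaultdict(list)
    for _participant, task, score in scores:
        by_task[task].append(score)

    averages = {task: sum(vals) / len(vals) for task, vals in by_task.items()}
    flagged = [(task, avg) for task, avg in averages.items() if avg < threshold]
    return sorted(flagged, key=lambda item: item[1])


# Made-up sample rows for illustration: (participant, task, score).
sample_scores = [
    ("P1", "initial setup", 2),
    ("P2", "initial setup", 3),
    ("P1", "room layout", 5),
    ("P2", "room layout", 4),
]

for task, avg in flag_for_review(sample_scores):
    print(f"{task}: average {avg:.1f} -> mark for further review")
```

Averaging per task is just one way to tally. The same idea works per participant or per app area, depending on what the session is meant to surface.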

All issues are documented in a spreadsheet for prioritization and reviewed by all departments involved. Tickets can then be created accordingly, and the sheet can be shared with the client to show progress and overall findings.

Results:

While this is an ongoing effort that changes with each new test we conduct, we've begun to better understand pain points from the patterns documented across all the testing. The images above show categorized feedback, ranked by priority. Priority is decided as a group after all feedback is collected.
